Supervised by M.Sc. Addis Dittebrandt

Download

GitHub Repository

Abstract

As ray tracing acceleration in modern graphics hardware improves, it becomes increasingly apparent that ray tracing will be used more frequently in real-time applications. Path tracing thus marks the current direction of research in real-time rendering.

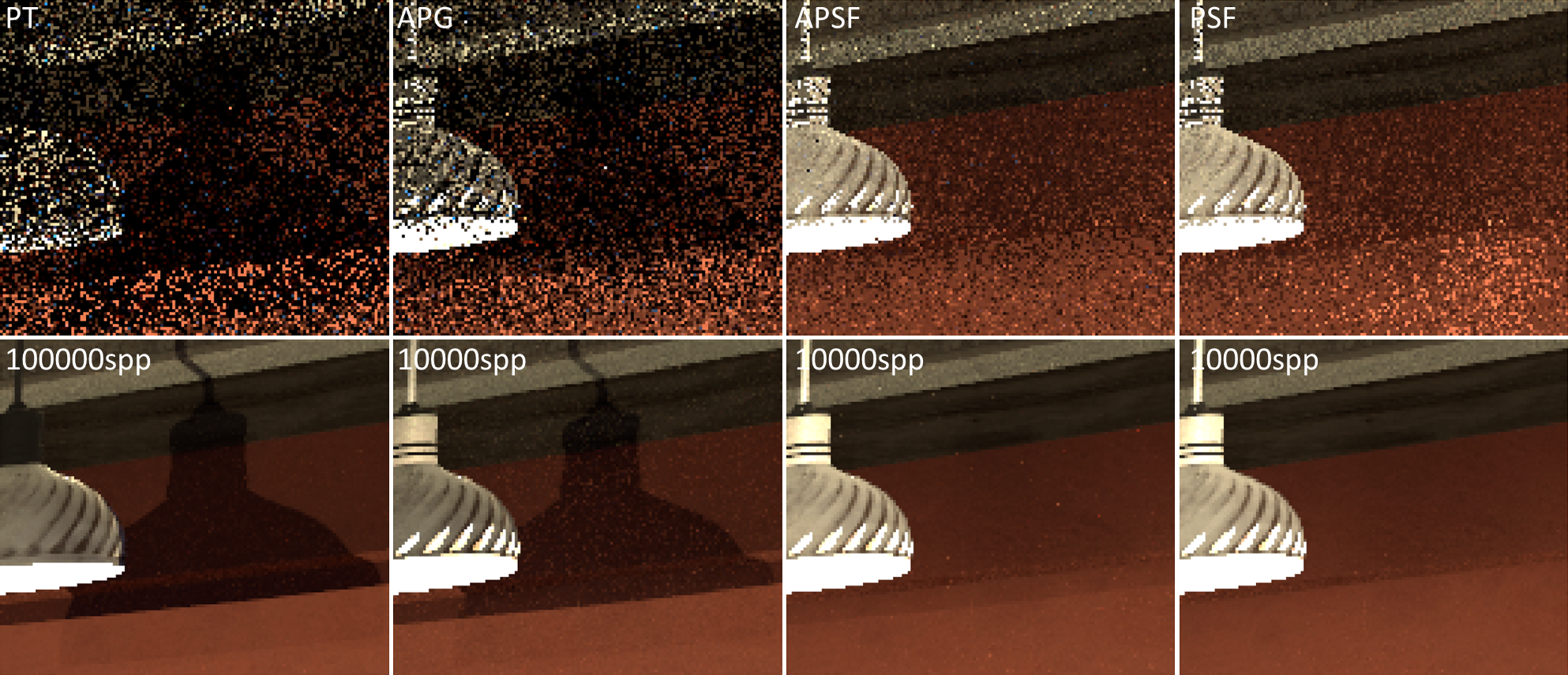

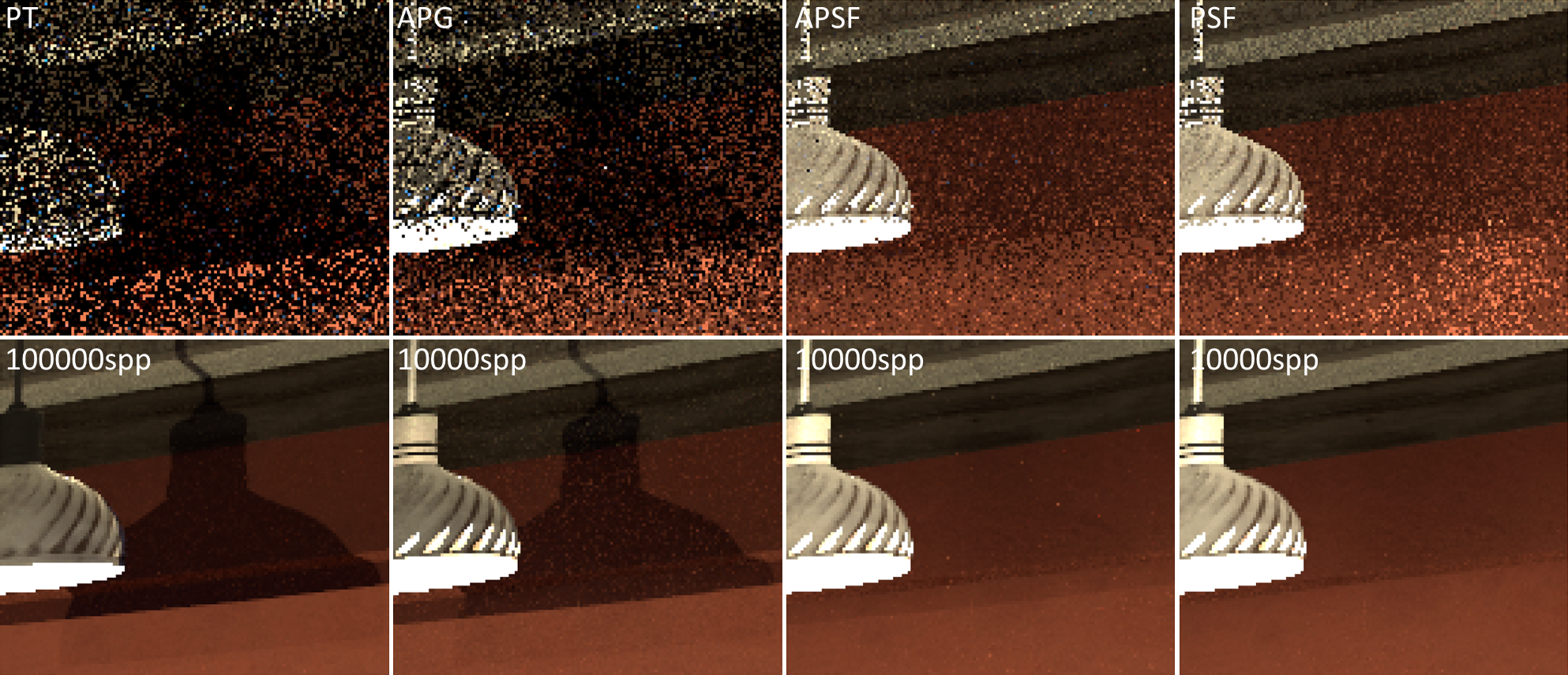

Even with modern hardware, it is still not feasible to trace more than one path per pixel in real time. This leads to severe variance, which manifests itself as noise. One way to reduce that noise is filtering; filtering over spatial regions is known as path space filtering. However, filtering over spatial regions results in a biased estimator, so the image quality is determined by a variance-bias trade-off.

This thesis introduces two techniques to control this variance-bias trade-off. Both use the spatial structure provided by path space filtering to estimate the variance of a spatial region, and both build on these variance estimates to improve image quality as well as frame times.

The first technique, adaptive path space filtering for primary surfaces, analyzes the variance on the primary surface and uses it to drive path survival and an interpolation between path tracing and path space filtering. It improves the frame times of path space filtering beyond those of path tracing while also producing better image quality in some cases.

The second technique, adaptive path graphs, analyzes the variance along paths and terminates a path into a spatial cell once the variance exceeds a given threshold. Although computationally heavy, it produces interesting results regarding the variance-bias trade-off and can handle difficult situations, especially when combined with the first technique.
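To make the role of the per-cell variance estimate more concrete, the following is a minimal Python sketch, not the implementation used in the thesis. It assumes scalar radiance samples, a running (Welford) variance per spatial cell, and a simple linear mapping from variance to an interpolation weight. The names `CellStats`, `blend_weight`, `shade`, and `should_terminate_into_cell` are hypothetical, and the choice to filter more strongly where variance is high is an assumption made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class CellStats:
    """Running statistics of the radiance samples that fell into one spatial cell."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the running mean

    def add(self, sample: float) -> None:
        # Welford's online update: numerically stable, one pass, O(1) memory.
        self.count += 1
        delta = sample - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (sample - self.mean)

    @property
    def variance(self) -> float:
        # Unbiased sample variance; defined as 0 with fewer than two samples.
        return self.m2 / (self.count - 1) if self.count > 1 else 0.0


def blend_weight(variance: float, threshold: float) -> float:
    """Hypothetical mapping from cell variance to a filter weight in [0, 1]."""
    if threshold <= 0.0:
        raise ValueError("threshold must be positive")
    return min(variance / threshold, 1.0)


def shade(pixel_sample: float, cell: CellStats, threshold: float) -> float:
    """Interpolate between the unfiltered path-traced sample and the cell average."""
    w = blend_weight(cell.variance, threshold)
    return (1.0 - w) * pixel_sample + w * cell.mean


def should_terminate_into_cell(variance: float, threshold: float) -> bool:
    """Assumed termination rule: stop the path once variance exceeds the threshold."""
    return variance > threshold


# Usage: accumulate samples into a cell, then blend one pixel sample with it.
cell = CellStats()
for s in (1.0, 2.0, 3.0, 4.0):
    cell.add(s)
blended = shade(4.5, cell, threshold=10.0 / 3.0)
```

A weight of 0 reproduces plain path tracing (no bias, full variance), while a weight of 1 reproduces the filtered cell average (lower variance, added bias), which is the trade-off the two techniques aim to control.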